Multi-modal, Multilingual, and Multi-hop Medical Instructional Video Question Answering Challenge

Held at NLPCC 2025


Designing models that can comprehend multi-modal (text, speech, and image/video) instructional videos in the medical domain, process multilingual data, and locate answers to multi-hop questions in the video is an emerging challenge. Following the successful 1st (NLPCC 2023, Foshan) and 2nd (NLPCC 2024, Hangzhou) CMIVQA challenges, this year a new task has been introduced to further advance research on multi-modal, multilingual, and multi-hop medical (M4) question answering systems, with a specific focus on medical instructional videos. The task evaluates models that integrate information from medical instructional videos, understand multiple languages, and answer complex multi-hop questions that require reasoning across modalities. Participants in M4IVQA are expected to develop algorithms that process both video and text data, understand multilingual queries, and provide relevant answers to multi-hop medical questions. Models will be evaluated on the relevance of their answers and on their ability to handle complex multi-modal and multilingual inputs.

The task consists of multiple stages (training, testing, and evaluation) and comprises three tracks: multi-modal, multilingual, and multi-hop Temporal Answer Grounding in Single Video (M4TAGSV); multi-modal, multilingual, and multi-hop Video Corpus Retrieval (M4VCR); and multi-modal, multilingual, and multi-hop Temporal Answer Grounding in Video Corpus (M4TAGVC).

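Fig. 1: Illustration of Multilingual Temporal Answer Grounding in Single Video (mTAGSV).

- Track 1. Multilingual Temporal Answer Grounding in Single Video (mTAGSV): As shown in Fig. 1, given a medical or health-related question and a single untrimmed bilingual medical instructional video, this track aims to locate the temporal answer span (start and end seconds) in the video that answers the question.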

Fig. 2: Illustration of Multilingual Video Corpus Retrieval (mVCR).

- Track 2. Multilingual Video Corpus Retrieval (mVCR): As shown in Fig. 2, given a medical or health-related question and a large collection of untrimmed bilingual medical instructional videos, this track aims to find the most relevant video corresponding to the given question in the video corpus.

Fig. 3: Illustration of Multilingual Temporal Answer Grounding in Video Corpus (mTAGVC).

- Track 3. Multilingual Temporal Answer Grounding in Video Corpus (mTAGVC): As shown in Fig. 3, given a text question and a large collection of untrimmed Chinese medical instructional videos, this track aims to retrieve the most relevant video from the corpus and locate the answer span within it.

The team constitution (members of a team) cannot be changed after the evaluation period has begun. Individuals and teams with top submissions will present their work at the workshop. We also encourage every team to upload a paper that briefly describes their system. If there are any questions, please let us know by raising an issue.


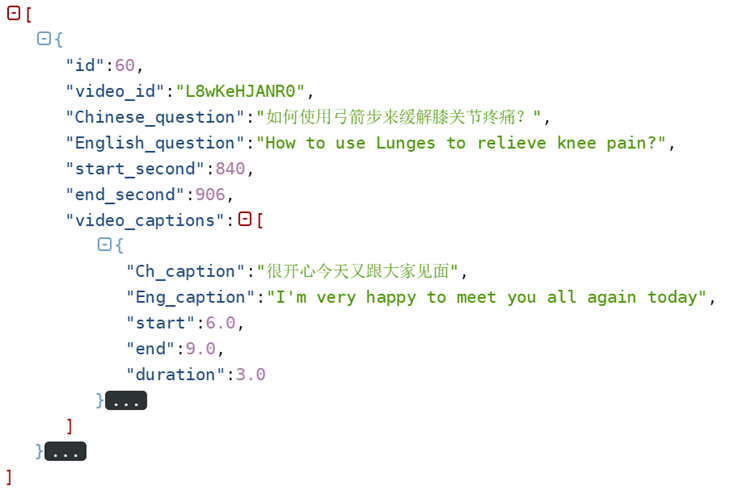

Fig. 4: Dataset examples of the MMIVQA shared task.

The videos for this competition are crawled from medical instructional channels on YouTube, and the subtitles (in both Chinese and English) are obtained from the corresponding videos. The questions and their temporal answers are manually labeled by annotators with medical backgrounds. Each video may contain several question-answer pairs, and questions with the same semantic meaning correspond to a unique answer. The dataset is split into a training set, a validation set, and a test set. During the challenge, the test set, along with its true “id” numbers, is not available to the public. Fig. 4 shows dataset examples for the mTAGV task. The “id” is the sample number used for the video retrieval track. The “video_id” is the unique YouTube ID of the video. The “Chinese_question” is written manually by Chinese medical experts. The “English_question” is translated and corrected by native English-speaking doctors. The “start and end second” fields give the temporal answer in the corresponding video. We also provide video captions automatically generated from each video, in Chinese (Ch_caption) and English (Eng_caption). The final goal is therefore to retrieve the target video ID from the test corpus and then locate the visual answer within it. More details about the dataset, as well as the download links, can be found at https://github.com/Lireanstar/NLPCC2024_MMIVQA. Our baseline method will be released at https://github.com/WENGSYX/CMIVQA_Baseline. Any original methods (language/vision/audio/multimodal, etc.) are welcome.
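The annotation fields described above can be loaded into a typed record before training. The following is a minimal sketch, not the official loader. It assumes each annotation arrives as a Python dict keyed by "id", "video_id", "Chinese_question", and "English_question" as named in the text. The span keys `answer_start_second` and `answer_end_second`, as well as the class and helper names, are hypothetical; check the released files for the real key names.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Mapping


@dataclass(frozen=True)
class QASample:
    """One annotated question-answer pair (hypothetical in-memory layout)."""

    sample_id: int         # "id": sample number used by the video retrieval track
    video_id: str          # "video_id": unique YouTube ID of the source video
    chinese_question: str  # "Chinese_question": written by Chinese medical experts
    english_question: str  # "English_question": translated and corrected by doctors
    start_second: float    # start of the temporal answer span
    end_second: float      # end of the temporal answer span

    @property
    def answer_duration(self) -> float:
        return self.end_second - self.start_second


# Hypothetical key names for the answer span; check the released files.
START_KEY = "answer_start_second"
END_KEY = "answer_end_second"


def parse_sample(record: Mapping[str, Any]) -> QASample:
    """Convert one raw annotation record into a QASample."""
    sample = QASample(
        sample_id=int(record["id"]),
        video_id=str(record["video_id"]),
        chinese_question=str(record["Chinese_question"]),
        english_question=str(record["English_question"]),
        start_second=float(record[START_KEY]),
        end_second=float(record[END_KEY]),
    )
    if sample.end_second <= sample.start_second:
        raise ValueError(f"empty answer span in sample {sample.sample_id}")
    return sample


def group_by_video(samples: List[QASample]) -> Dict[str, List[QASample]]:
    """Collect all question-answer pairs that belong to the same video."""
    groups: Dict[str, List[QASample]] = {}
    for sample in samples:
        groups.setdefault(sample.video_id, []).append(sample)
    return groups
```

Grouping by `video_id` reflects the fact that one video may carry several question-answer pairs. This is also the natural unit for the retrieval tracks.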

Train Set: BaiduNetDisk

Test Set: BaiduNetDisk

Statistics

| Dataset | Videos | QA pairs | Vocab Size | Ch_Question Avg. Len. | Eng_Question Avg. Len. | Video Avg. Len. |
|---|---|---|---|---|---|---|
| Train Set | 1228 | 5840 | 6582 | 17.16 | 6.97 | 263.3 |
| Dev Set | 200 | 983 | 1743 | 17.81 | 7.26 | 242.4 |
| Test Set | 200 | 1022 | 2234 | 18.22 | 7.44 | 310.9 |

Details

All Train & Dev files include videos, audio, and the corresponding subtitles. The videos and audio come from Chinese medical channels on YouTube and were obtained using the Pytube tool. The subtitles are generated by Whisper and contain both Simplified and Traditional Chinese characters. To unify the character types of questions and subtitles, we converted both into Simplified Chinese. As competition benchmarks, we recommend references [1-2] and [6] as strong baselines. Beginners can quickly learn about related competitions through references [3-4]. The Test A set and the baseline are released, and any original methods (language/vision/audio/multimodal, etc.) are welcome.

Guidelines

Terms and Conditions

The dataset users have requested permission to use the MMIVQA dataset. In exchange for such permission, the users hereby agree to the following terms and conditions:

- The dataset can only be used for non-commercial research and educational purposes.

- The dataset must not be provided or shared in part or full with any third party.

- The researcher takes full responsibility for the usage of the dataset at any time, and the use of the YouTube videos must respect the YouTube Terms of Service.

- The authors of the dataset make no representations or warranties regarding the dataset, including but not limited to warranties of non-infringement or fitness for a particular purpose.

- You accept full responsibility for your use of the dataset and shall defend and indemnify the Authors of MMIVQA, against any and all claims arising from your use of the dataset, including but not limited to your use of any copies of copyrighted videos that you may create from the dataset.

- You may provide research associates and colleagues with access to the dataset provided that they first agree to be bound by these terms and conditions.

- If you are employed by a for-profit, commercial entity, your employer shall also be bound by these terms and conditions, and you hereby represent that you are authorized to enter into this agreement on behalf of such employer.

The challenge is evaluated quantitatively from the following perspectives:

Track 1

- Multilingual Temporal Answer Grounding in Single Video:

We evaluate the results using the metrics defined below: (1) Intersection over Union (IoU), and (2) mIoU, the average IoU over all testing samples. Following previous work [3]-[5], we adopt “R@n, IoU = μ” and “mIoU” as the evaluation metrics, treating the localization of frames in the video as a span prediction task. “R@n, IoU = μ” denotes the percentage of testing samples for which at least one of the top-n predicted moments has an IoU with the ground-truth span larger than μ. “mIoU” is the average IoU over all samples. We use n = 1 and μ ∈ {0.3, 0.5, 0.7} to evaluate the TAGSV results.

$$ \begin{aligned} \mathrm{IoU}_i & =\frac{|A_i \cap B_i|}{|A_i \cup B_i|} \\ \mathrm{mIoU} & =\frac{1}{N}\sum_{i=1}^{N} \mathrm{IoU}_i \end{aligned} $$

where A_i and B_i are the predicted and ground-truth answer spans of the i-th testing sample, and N is the number of testing samples.
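The Track 1 metrics can be computed from one top-1 predicted span per question. The following is a minimal sketch, not the official scorer. It assumes spans are `(start_second, end_second)` tuples and that the scores are returned as fractions, which would be multiplied by 100 if reported as percentages. It also assumes that an IoU exactly equal to μ counts as a hit. The function names are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Span = Tuple[float, float]  # (start_second, end_second)


def temporal_iou(pred: Span, gold: Span) -> float:
    """IoU = |A ∩ B| / |A ∪ B| for two 1-D time spans."""
    inter = max(0.0, min(pred[1], gold[1]) - max(pred[0], gold[0]))
    union = (pred[1] - pred[0]) + (gold[1] - gold[0]) - inter
    return inter / union if union > 0 else 0.0


def evaluate_tagsv(
    preds: Sequence[Span],
    golds: Sequence[Span],
    thresholds: Sequence[float] = (0.3, 0.5, 0.7),
) -> Dict[str, float]:
    """R@1, IoU = mu for each threshold, plus mIoU (all as fractions)."""
    if len(preds) != len(golds) or not golds:
        raise ValueError("need one top-1 prediction per ground-truth span")
    ious = [temporal_iou(p, g) for p, g in zip(preds, golds)]
    n = len(ious)
    scores = {
        # ">=" for the threshold test is an assumption about tie handling
        f"R@1,IoU={mu}": sum(iou >= mu for iou in ious) / n
        for mu in thresholds
    }
    scores["mIoU"] = sum(ious) / n  # mIoU = (1/N) * sum_i IoU_i
    return scores
```

The union is computed as len(A) + len(B) − |A ∩ B|, so disjoint spans correctly score 0.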

Note: The main ranking of this track is based on the mIoU score, and other metrics in this track are also provided for further analysis.

Track 2

- Multilingual Video Corpus Retrieval

Following the pioneering work [6], we adopt the video retrieval metric “R@n” with n = 1, 10, and 50 to measure the recall of video retrieval. We also use the Mean Reciprocal Rank (MRR) to evaluate the Chinese medical instructional video corpus retrieval track. MRR is calculated as follows.

$$ \mathrm{MRR}=\frac{1}{|V|} \sum_{i=1}^{|V|} \frac{1}{\mathrm{Rank}_i} $$

where |V| is the number of testing samples. For each testing sample V_i, Rank_i is the position of the target ground-truth video in the predicted list.

Note: The main ranking of this track is based on the Overall score, calculated by summing the R@1, R@10, R@50, and MRR scores:

$$ \text{Overall}=\sum_{i=1}^{|M|} \text{Value}_i $$

where |M| = 4 is the number of evaluation metrics and Value_i is the i-th metric among R@1, R@10, R@50, and MRR.
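The Track 2 metrics can be computed from one ranked list of video IDs per testing sample. The following is a minimal sketch, not the official scorer. It assumes that a ground-truth video missing from the predicted list contributes 0 to both R@n and MRR, and that all scores are fractions. The function name is hypothetical.

```python
from typing import Dict, Sequence


def retrieval_scores(
    rankings: Sequence[Sequence[str]],
    targets: Sequence[str],
    ns: Sequence[int] = (1, 10, 50),
) -> Dict[str, float]:
    """R@n, MRR and Overall for video corpus retrieval.

    rankings[i] is the predicted list of video IDs for testing sample i,
    best first; targets[i] is its ground-truth video ID.
    """
    if len(rankings) != len(targets) or not targets:
        raise ValueError("need one ranked list per testing sample")
    hits = {n: 0 for n in ns}
    reciprocal_sum = 0.0
    for ranking, target in zip(rankings, targets):
        ranking = list(ranking)
        if target not in ranking:
            continue  # assumption: a missing target contributes 0 to R@n and MRR
        rank = ranking.index(target) + 1  # 1-based Rank_i
        reciprocal_sum += 1.0 / rank
        for n in ns:
            if rank <= n:
                hits[n] += 1
    total = len(targets)  # |V|
    scores = {f"R@{n}": hits[n] / total for n in ns}
    scores["MRR"] = reciprocal_sum / total
    # Overall = R@1 + R@10 + R@50 + MRR (|M| = 4 with the default ns)
    scores["Overall"] = sum(scores[f"R@{n}"] for n in ns) + scores["MRR"]
    return scores
```

Because Overall is a plain sum, it is only comparable across teams if every component uses the same scale (all fractions or all percentages).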

Track 3

- Multilingual Temporal Answer Grounding in Video Corpus:

We keep the Intersection over Union (IoU) metric as in Track 1, and the retrieval metrics “R@n, n = 1/10/50” and MRR as in Track 2, for further analysis. “R@n, IoU = 0.3/0.5/0.7” is still used, with n = 1, 10, and 50. The mean IoU under the video retrieval subtask, i.e., “R@1/10/50|mIoU”, is also adopted to measure the average level of the participating models' performance.

Note: The main ranking of this track is based on the Average score, calculated by averaging the R@1|mIoU, R@10|mIoU, and R@50|mIoU scores:

$$ \text{Average}=\frac{1}{|M'|} \sum_{i=1}^{|M'|} \text{Value}_i $$

where |M'| = 3 is the number of evaluation metrics and Value_i is the value of the i-th metric (i.e., R@1|mIoU, R@10|mIoU, R@50|mIoU).
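The exact definition of R@n|mIoU is not spelled out above, so the following sketch relies on an assumed reading. For each question, the IoU of the span predicted inside the ground-truth video counts only if that video appears in the top-n retrieved videos; otherwise the question scores 0. The scores are then averaged over all questions. This is a minimal sketch under that assumption, not the official scorer, and the function names are hypothetical.

```python
from typing import Dict, Mapping, Sequence, Tuple

Span = Tuple[float, float]  # (start_second, end_second)


def span_iou(a: Span, b: Span) -> float:
    """Temporal IoU of two spans, as in Track 1."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def tagvc_scores(
    rankings: Sequence[Sequence[str]],
    pred_spans: Sequence[Mapping[str, Span]],
    gold_videos: Sequence[str],
    gold_spans: Sequence[Span],
    ns: Sequence[int] = (1, 10, 50),
) -> Dict[str, float]:
    """R@n|mIoU for each n and their Average.

    Assumed definition: for query q, the IoU of the span predicted in the
    ground-truth video counts only if that video is in the top-n of
    rankings[q]; otherwise the query scores 0. Scores are averaged over queries.
    """
    total = len(gold_videos)
    if not total or not (len(rankings) == len(pred_spans) == len(gold_spans) == total):
        raise ValueError("inputs must have one entry per query")
    scores: Dict[str, float] = {}
    for n in ns:
        iou_sum = 0.0
        for ranking, spans, video, gold in zip(rankings, pred_spans, gold_videos, gold_spans):
            if video in list(ranking)[:n] and video in spans:
                iou_sum += span_iou(spans[video], gold)
        scores[f"R@{n}|mIoU"] = iou_sum / total
    # Average = (1/|M'|) * sum of the R@n|mIoU values, |M'| = len(ns)
    scores["Average"] = sum(scores[f"R@{n}|mIoU"] for n in ns) / len(ns)
    return scores
```

Under this reading, R@n|mIoU can only grow as n increases. This is why the Average rewards both accurate retrieval and accurate grounding.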

Sign up to receive updates using this form.

The submission deadline is at 11:59 p.m. of the stated deadline date (UTC/GMT+08:00).

During the training and validation phases, we release the complete dataset (including the corresponding labels) so that participating teams can freely choose their models. During the test set release phase, we will release additional test sets and update the online leaderboard every day. Participants can submit their results to the competition organizers via e-mail for result registration, and each team may make at most 5 submissions in total.

| Event | Date |
|---|---|
| Announcement of shared tasks and registration open for participation | Feb. 17, 2025 |
| Release of detailed task guidelines & training data | Feb. 28, 2025 |
| Registration deadline | March 25, 2025 |
| Release of test data | April 11, 2025 |
| Participants' results submission deadline | April 20, 2025 |
| Evaluation results release and call for system reports and conference papers | April 30, 2025 |

Shoujun Zhou

Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences

Bin Li

College of Electrical and Information Engineering, Hunan University

Shenxi Liu

School of Computer Science and Technology, Beijing Institute of Technology

Yixuan Weng

Westlake University